Hackers are Using AI Tools such as ChatGPT for Malware Development

There are many benefits of using AI in healthcare, including faster drug development and improved medical image analysis, but the same AI systems that benefit healthcare could also be used for malicious purposes such as malware development. The Health Sector Cybersecurity Coordination Center (HC3) recently published an analyst note summarizing how hackers could use artificial intelligence tools for this purpose, and evidence is mounting that AI tools are already being abused.

AI systems have evolved to the point where they can write human-like text with a very high degree of fluency and creativity, including valid computer code. One AI tool that has proven popular in recent weeks is ChatGPT. The OpenAI-developed chatbot produces human-like text in response to queries and had more than 1 million users in December. The tool has been used for myriad purposes, including writing poems, songs, reports, web content, and emails, and it has reportedly passed the US Medical Licensing Examination and the bar exam.

In response to the popularity of ChatGPT, security researchers started testing its capabilities to determine how easily the tool could be used for malicious purposes. Multiple security researchers found that, even though the terms of use prohibit using the chatbot for potentially harmful purposes, they were able to get it to craft convincing phishing emails free of the spelling mistakes and grammatical errors often found in such emails. ChatGPT and other AI tools could be used for phishing and social engineering, opening these attacks up to a much broader range of individuals while also making them more effective.

One of the biggest concerns is the use of AI tools to accelerate malware development. Researchers at IBM developed an AI-based tool to demonstrate how AI could power a new breed of malware. The tool, dubbed DeepLocker, incorporates a range of highly targeted and evasive attack techniques that allow the malware to conceal its intent until it reaches a specific victim. The malicious actions are unleashed only when the AI model identifies the target through facial recognition, geolocation, voice recognition, or similar indicators.

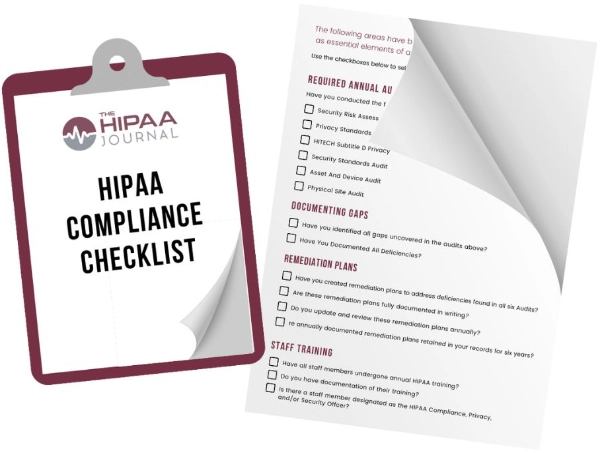

If you ask ChatGPT to write a phishing email or generate malware, the request will be refused as it violates the terms and conditions, but it is possible to make seemingly innocuous requests and achieve those aims. Researchers at Check Point showed it was possible to create a full infection flow using ChatGPT. They used ChatGPT to craft a convincing phishing email impersonating a hosting company for delivering a malicious payload, used OpenAI’s code-writing system, Codex, to create VBA code to add to an Excel attachment, and also used Codex to create a fully functional reverse shell. “Threat actors with very low technical knowledge — up to zero tech knowledge — could be able to create malicious tools [using ChatGPT]. It could also make the day-to-day operations of sophisticated cybercriminals much more efficient and easier – like creating different parts of the infection chain,” said Sergey Shykevich, Threat Intelligence Group Manager at Check Point.

Hackers are already leveraging OpenAI's tools to develop malware. One hacker used an OpenAI tool to write a Python multi-layer encryption/decryption script that could be used as ransomware, and another created an information stealer capable of searching for, copying, compressing, and exfiltrating sensitive information. While AI systems offer many benefits, these tools will inevitably be used for malicious purposes. The cybersecurity community has yet to develop mitigations or defenses against the use of these tools for creating malware, and it may not even be possible to prevent their abuse.