Mandiant: Malicious Actors' Use of Generative AI Remains Limited

There is justifiable fear that malicious actors will leverage generative AI to facilitate their activities; however, the adoption of generative AI by threat actors appears to be limited, certainly for intrusion operations. Mandiant reports that it has been tracking threat actor interest in generative AI, but its research and open source accounts indicate that generative AI is currently being used to a significant extent only for social engineering and misinformation campaigns.

Mandiant has found evidence indicating generative AI is being used to create convincing lures for phishing and business email compromise (BEC) attacks. Malicious actors can create text output reflecting natural human speech patterns for phishing lures and enhance the complexity of language in their existing operations. Threat actors have used generative AI to manipulate video and voice content in BEC scams and to manipulate images to defeat know-your-customer (KYC) requirements. Evidence has also been obtained indicating financially motivated threat actors are using the malicious WormGPT tool to create convincing phishing and BEC lures.

Mandiant has previously demonstrated how malicious actors can use AI-based tools to support their operations, such as for processing open source information and stolen data for reconnaissance purposes. For example, state-sponsored intelligence services can use machine learning and data science tools on massive quantities of stolen and open source data to improve data processing and analysis, increasing the speed and efficiency of operationalizing collected information. In 2016, a system was demonstrated that can identify high-value targets from previous Twitter activity and generate convincing lures targeting individuals based on past tweets. Mandiant has also found evidence indicating a North Korean cyber espionage actor (APT43) has an interest in large language models (LLMs) and is using LLM tools, although it has yet to be established why the LLMs are being used.

Currently, one of the most effective uses of generative AI is for information operations. AI tools help information operation actors with limited resources and capabilities produce higher quality content at scale, and the tools increase their ability to create content that may have a stronger persuasive effect on their targeted audiences than was previously possible. “We believe that AI-generated images and videos are most likely to be employed in the near term; and while we have not yet observed operations using LLMs, we anticipate that their potential applications could lead to their rapid adoption,” suggest the researchers.

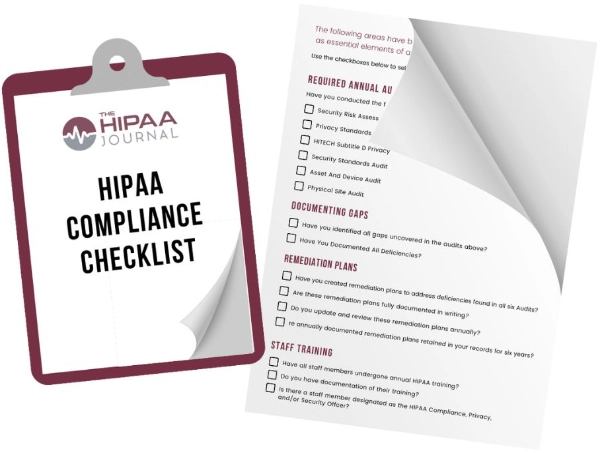

While there is limited evidence of threat actors leveraging LLMs for creating new malware and improving existing malware, this is an area that is expected to see significant growth. Mandiant reports that several threat actors are advertising services on underground forums on how to bypass restrictions on LLMs to get them to assist with malware development.

“While we expect the adversary to make use of generative AI, and there are already adversaries doing so, adoption is still limited and primarily focused on social engineering,” John Hultquist, Chief Analyst, Mandiant Intelligence, Google Cloud, told The HIPAA Journal. “There’s no doubt that criminals and state actors will find value in this technology, but many estimates of how this tool will be used are speculative and not grounded in observation.”

While threat actors are expected to increasingly use generative AI for offensive purposes, AI-based tools currently offer far more benefits to defenders. “AI has been around for a while, but this is the inflection point where the general public has taken notice. Like any technological innovation, we expect adversaries are going to find applications for these tools. However, there is far greater promise for defenders who have the ability to direct the development of it,” said Sandra Joyce, VP, Mandiant Intelligence, Google Cloud. “We still own the technology. There are going to be people who will use AI for ill intent, but that shouldn’t stop us from leapfrogging ahead to out-innovate the adversaries.”